Anyone who owns a Synology NAS will sooner or later experience a failure, whether the entire NAS unit stops working or just one of the hard drives gives up the ghost.

In the following case, however, we ran into the somewhat well-known "Synology blinking blue LED" error.

The NAS, in our example an RS2212+ unit, was still reachable by ping during operation. Access to the mounted iSCSI volume also still worked, but a monitoring system reported that the status of this unit had changed.

A check via the web interface got nowhere: the web interface was no longer available, and not even its login page was displayed.

SSH/Telnet access is disabled by default, and with the web interface down there was no way to enable it on the Synology NAS. So the DiskStation had to be restarted manually.

Normally, a Synology NAS beeps and then shuts down on its own when the power button is pressed and held. In this case even that no longer worked, so the NAS had to be forced off with a hard shutdown.

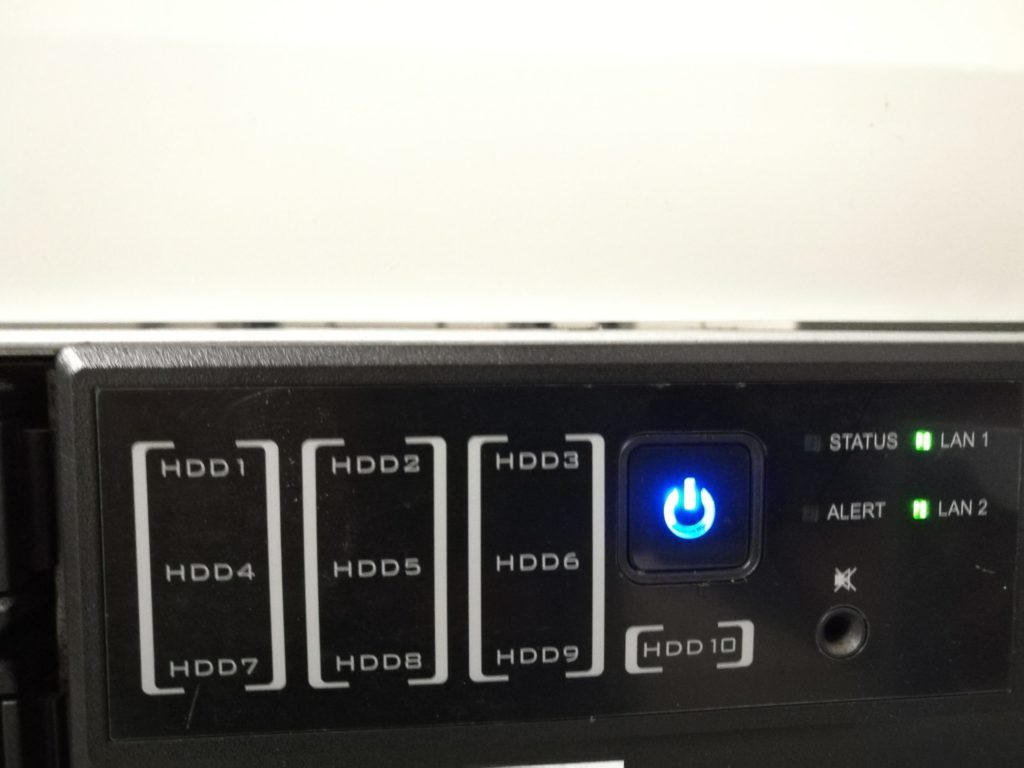

When the system was restarted, it behaved as follows.

The power button blinked blue continuously, and the two LAN port LEDs showed traffic. However, the beep that normally sounds after a few minutes to signal a successful boot never came. All LEDs on the hard drive bays also stayed off and showed no reaction.

Troubleshooting the Synology NAS (Synology blinking blue LED error)

Troubleshooting was a bit difficult here, since no terminal session to the device could be established and no console port cable was available. In the local manufacturer forums, the usual suspects were a defective power supply, a defective hard drive, or even a faulty motherboard.

To get to the bottom of it, I did the following. First, I disconnected the NAS from power and waited a few minutes. Then I pulled all hard drives out of their bays and reconnected the power. Booting without hard disks at least brought up the status LED, accompanied by an error beep.

In this state, I then reinserted the hard disks one after another. The disk LEDs lit up briefly, but the NAS was still not reachable via its IP address and did not leave error mode.

Rebooting with the disks inserted brought back the same symptoms as at the beginning: a flashing blue LED, no response from the system, and no active LEDs on the hard disk bays.

It had to have something to do with the hard disks. So we inserted each hard disk individually and watched how the system behaved. Fortunately, the system behaved differently as soon as the hard disk in slot 1 was removed.

After the hard drive in slot 1 was replaced, the NAS had to be located on the network and set up again using the Synology Assistant tool.

DiskStation operating system lost

But why did the system not start up again, and why did the Synology blink blue?

The cause was that the hard drive in slot 1 was defective, and this is where the Synology DiskStation stores its operating system. Even if a RAID is set up on the NAS, the operating system partition remains on this single hard drive. If that drive fails, the web interface becomes inaccessible, and even a reboot does not get the system to boot.

Unfortunately, the LEDs of the individual hard disk bays were also no longer activated, which made troubleshooting a bit tricky.

After the NAS was set up again with the Synology Assistant tool and the latest operating system was installed, the remaining disks could be checked for SMART errors.
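If the disks are attached to a Linux machine with smartmontools installed, the same SMART check can be scripted. The following is a minimal sketch, not the procedure used in this case (here the check was done after reinstalling DSM): it assumes `smartctl` is available and run as root, and the helper names `smart_health` and `check_disk` as well as device names like `/dev/sda` are hypothetical. It only reads the overall health verdict printed by `smartctl -H`.

```python
import subprocess
from typing import Optional


def smart_health(output: str) -> Optional[bool]:
    """Interpret the text printed by `smartctl -H`.

    Returns True for a passing health status, False for a failing one,
    and None if no health line was found in the output.
    """
    for line in output.splitlines():
        line = line.strip()
        # Line format printed for ATA/SATA drives
        if line.startswith("SMART overall-health self-assessment test result:"):
            return line.split(":", 1)[1].strip().startswith("PASSED")
        # Line format printed for SCSI/SAS drives
        if line.startswith("SMART Health Status:"):
            return line.split(":", 1)[1].strip() == "OK"
    return None


def check_disk(device: str) -> Optional[bool]:
    """Run `smartctl -H` on a device such as "/dev/sda" (requires root).

    smartctl reports problems through a bitmask exit status, so a nonzero
    exit code is not raised as an error here; only the printed text is parsed.
    """
    result = subprocess.run(
        ["smartctl", "-H", device], capture_output=True, text=True
    )
    return smart_health(result.stdout)
```

Calling `check_disk("/dev/sdb")` for each remaining disk gives a quick pass/fail overview; a `PASSED` verdict does not rule out growing reallocated-sector counts, so the detailed attributes (`smartctl -A`) are still worth a look.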

Unfortunately, in this case several other hard disks turned out to be defective as well, and since a RAID 6 survives the loss of at most two disks, the array was no longer valid.

In such a case, the only option is to recreate the RAID and restore the data from a backup.
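Why the array was lost comes down to simple arithmetic: each RAID level tolerates a fixed worst-case number of failed disks. The sketch below is illustrative only; the function names and the 10-disk example are hypothetical, and Synology's own SHR variants are not modeled.

```python
def tolerated_failures(level: str, disks: int) -> int:
    """Worst-case number of failed disks an array can survive."""
    minimum = {"RAID 0": 2, "RAID 1": 2, "RAID 5": 3, "RAID 6": 4}
    if level not in minimum:
        raise ValueError(f"unsupported level: {level}")
    if disks < minimum[level]:
        raise ValueError(f"{level} needs at least {minimum[level]} disks")
    if level == "RAID 0":
        return 0  # striping only, no redundancy
    if level == "RAID 1":
        return disks - 1  # every disk holds a full copy
    if level == "RAID 5":
        return 1  # one parity block per stripe
    return 2  # RAID 6: two independent parity blocks per stripe


def array_survives(level: str, disks: int, failed: int) -> bool:
    """True if the array is still readable with `failed` dead disks."""
    return failed <= tolerated_failures(level, disks)
```

For a hypothetical 10-disk RAID 6, `array_survives("RAID 6", 10, 2)` is `True`, while a third failed disk makes it `False`, which is the point at which only a restore from backup helps.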

Conclusion

This case shows that Synology should make some improvements here. All of this was observed on an older RS2212+; whether newer Synology NAS systems behave the same way, I cannot judge.

However, I would welcome the option to store the operating system mirrored on at least two disks, with the bootloader simply always using slots 1 and 2 as its boot order. That way, if the primary hard disk in bay 1 failed, the bootloader could boot from bay 2.
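The proposed fallback boils down to "boot from the first slot in a fixed order that still holds a usable system partition". This is a hypothetical sketch of that idea only, not how Synology's bootloader actually works; `pick_boot_slot` and the `is_bootable` check are invented for illustration.

```python
from typing import Callable, Optional, Sequence


def pick_boot_slot(
    boot_order: Sequence[int], is_bootable: Callable[[int], bool]
) -> Optional[int]:
    """Return the first slot in boot_order whose system partition is usable."""
    for slot in boot_order:
        if is_bootable(slot):
            return slot
    return None  # no usable copy of the operating system left
```

With a failed disk in bay 1, `pick_boot_slot([1, 2], lambda slot: slot != 1)` returns `2`, so the system would still come up from the mirrored copy in bay 2.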

Alternatively, I would welcome the option to move the operating system onto the RAID volume once a RAID has been created.
